My neighbor is noisy

Don’t worry, I won’t be discussing my actual neighbors during the Covid-19 confinement. Lately, several of my customers have had random, difficult-to-diagnose issues with their Cloud instances. The problem is not tied to a specific provider (some of them use AWS, others use OVH Public Cloud), yet the root cause is the same. Before going into more detail, here is the quick answer: their neighbors are noisy.

Protesters demonstrate against Donald Trump’s presidency during the campaign trail in 2016 (by AP)

A quick reminder

Of course, you all know what the Cloud is, don’t you? And no, it’s not just your online storage or your photo sharing. Since we are talking specifically about Cloud Computing, here is the Wikipedia definition:

Cloud computing is the on-demand availability of computer system resources, especially data storage and computing power, without direct active management by the user. The term is generally used to describe data centers available to many users over the Internet. Large clouds, predominant today, often have functions distributed over multiple locations from central servers. If the connection to the user is relatively close, it may be designated an edge server.

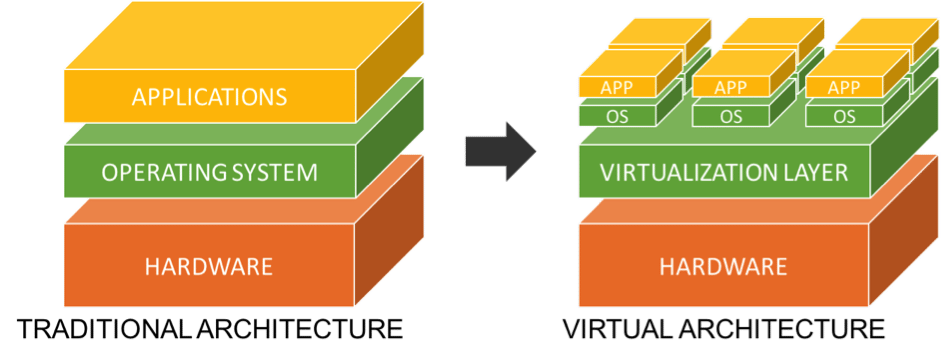

Technically, those are virtual resources, easily manageable. By virtual resources, I mean a segmentation of the physical machine into different instances, dedicated to different customers. All of this at the scale of entire data centers.

The virtualization is based on the usual market solutions (VMware, KVM, …), possibly with a management overlay, or on home-made solutions. We therefore talk about a hypervisor for the host machine and an instance for the virtual machine.

Virtual Architecture

The subject that interests us here is in itself neither new nor unknown to Cloud providers: they are all gradually releasing offers with “guaranteed performance” or “dedicated resources”. But why this?

Let’s go back to our virtual architecture: we see it as a segmentation of the host machine. Let’s replace our server with a cake and the instances with slices. You can cut your cake into as many slices as you want, as long as there is cake left. To have more slices, you therefore have to make them smaller. The same is theoretically true for virtualization.

Theoretically is the important word here: your providers bet that not all their customers will use all the resources allocated to their instances at the same time. They therefore allocate more resources to the instances, in total, than the host machine can actually provide. This is called overbooking (also known as overcommitment or oversubscription).

Most of the time they are right. But the moments when they are wrong are detrimental to you. As a customer, you can anticipate this overbooking from your provider’s pricing: the cheaper the offer, the higher the probability and the ratio of overbooking. In addition to dedicated resources, some providers promise you an adequate infrastructure where storage would be the absolute key, through fully SSD-based storage. That’s good. But that’s not all.
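To put a number on the cake analogy, here is a minimal sketch of how an overbooking ratio can be computed. The host size and instance sizes are purely hypothetical, as is the function name; providers do not publish these figures.

```python
def overcommit_ratio(allocated_vcpus, physical_cores):
    """Ratio between the vCPUs sold to customers and the cores the host really has."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return sum(allocated_vcpus) / physical_cores


# Hypothetical host: 32 physical cores shared by 24 instances of 4 vCPUs each.
instances = [4] * 24
ratio = overcommit_ratio(instances, 32)
print(f"overbooking ratio: {ratio:.1f}x")  # 96 vCPUs / 32 cores = 3.0x
```

A ratio above 1 only hurts when enough slices are eaten at the same time, which is exactly the bet described above.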

My noisy neighbor is named…

Because of this overbooking, whether it is naturally related to the mass of instances or specifically due to a single instance (our Noisy Neighbor), the effect is the same: at a given time, you have fewer resources available than expected.

The first question I hear most often then is:

But how do you detect it?

This is the most difficult part for you because, while your service is running, it can manifest itself in various ways, with obvious signals only in some rare cases. The other signals will simply be the direct consequences on your own service.

On Linux, top can provide one of the obvious signals. Have you ever wondered what the st value at the end of the %Cpu(s) line means? In short, st, for “steal time”, is only relevant in virtualized environments (your Cloud setup here). It represents the time during which the host CPU was not available to the current instance: it was stolen. If you want more details about steal time, you can refer to this tech paper from IBM.

A recommendation I make to all my customers, when we work on their monitoring platforms, is to include behavior-change and comparison tests. Do latency levels suddenly increase? Does the system hang without being loaded, and without I/O?
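If you would rather collect steal time in your own monitoring than read it from top, the same counters are exposed in /proc/stat. The sketch below is a minimal example, assuming a Linux kernel that exposes the steal field (the eighth counter on the aggregate cpu line); the function names and the 5-second interval are my own choices.

```python
import time


def read_cpu_times(path="/proc/stat"):
    """Return the aggregate CPU counters (in clock ticks) from the first line of /proc/stat."""
    with open(path) as f:
        fields = f.readline().split()
    if not fields or fields[0] != "cpu":
        raise ValueError("unexpected /proc/stat format")
    return [int(value) for value in fields[1:]]


def steal_percent(before, after):
    """Share of CPU time stolen by the hypervisor between two samples.

    Counter order after the "cpu" label: user, nice, system, idle, iowait,
    irq, softirq, steal, guest, guest_nice. Guest time is already counted
    in user/nice, so only the first eight counters are summed.
    """
    deltas = [new - old for old, new in zip(before[:8], after[:8])]
    total = sum(deltas)
    if len(deltas) < 8 or total <= 0:
        return 0.0  # no steal counter available, or no time elapsed
    return 100.0 * deltas[7] / total


def sample_steal(interval=5.0):
    """Measure steal time over `interval` seconds on the current machine."""
    first = read_cpu_times()
    time.sleep(interval)
    return steal_percent(first, read_cpu_times())
```

Calling `sample_steal()` on an instance returns a percentage comparable to the st value shown by top. A value that stays well above zero is worth graphing and alerting on.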
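A behavior-change test can be as simple as comparing a recent window of measurements with a baseline window. The following is a minimal sketch; the factor of 2 and the use of the median are assumptions to tune for your own service, not a recommendation from any monitoring tool.

```python
from statistics import median


def deviates_from_baseline(baseline, recent, factor=2.0):
    """Return True when the recent median exceeds `factor` times the baseline median."""
    if not baseline or not recent:
        raise ValueError("both windows need at least one sample")
    return median(recent) > factor * median(baseline)


# Hypothetical latencies in milliseconds.
usual = [20, 22, 21, 19, 20]
this_morning = [80, 95, 90]
print(deviates_from_baseline(usual, this_morning))  # True
```

The median is used rather than the mean so that a single slow request does not trigger the alert on its own.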

Monitoring room in China

On latency, for example, I remember one client for whom we had worked on content distribution services based on nginx, with some pretty advanced fine-tuning. From there, we launched tests and set up monitoring for each of the instances concerned. Latencies were very low and bandwidth was high, at all times, whatever the instance and the Cloud provider. Then one morning, this customer called me in a panic: all his European customers were complaining about abnormally long latencies when opening the hosted videos. The analysis went quite far:

- check the navigation timing values to determine what could generate these latencies: the TTFB increases abnormally while everything else is stable.

- only the platform hosted in France on one provider is impacted: no abnormal latency on other providers or regions

- no machine load (nor steal time - see above)

- no I/O

- low throughput on the instances concerned: 10 Mbps on average versus several hundred for the unaffected ones

While we were testing in all directions, with no configuration changes other than juggling the nginx debugging options, the service returned to normal. The next day, around the same time, the same client contacted me again in a panic over the same subject. Same analysis, same result, same return to normal.
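The comparison between instances in the checklist above can be automated. Here is a minimal sketch that flags instances whose throughput falls far below the fleet median; the instance names, the figures and the 25% cut-off are hypothetical.

```python
from statistics import median


def find_throughput_outliers(throughput_mbps, min_share=0.25):
    """Return the names of instances below `min_share` of the fleet median throughput."""
    if not throughput_mbps:
        return []
    fleet_median = median(throughput_mbps.values())
    return sorted(
        name
        for name, mbps in throughput_mbps.items()
        if mbps < min_share * fleet_median
    )


# Hypothetical measurements: two French instances are stuck around 10 Mbps.
fleet = {"fr-1": 10, "fr-2": 12, "de-1": 400, "us-1": 350, "uk-1": 380}
print(find_throughput_outliers(fleet))  # ['fr-1', 'fr-2']
```

Comparing against peers rather than against a fixed threshold keeps the check valid when traffic drops everywhere, for example at night.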

After (too) long exchanges with the support of this provider, we finally got the information: another instance was behaving abnormally and was consuming a good part of the machine resources, in particular the network part (speed and number of connections). You said peer-to-peer for last summer’s family videos?

My neighbor moves me

The “system hang” case is a little more vicious and specific. A client in the video industry does massive transcoding using ffmpeg. Part of the work is done on CPUs (the smaller resolutions) while the higher resolutions (HD / 4K in mind) are done on GPUs. The setup works well, except that some live streams are regularly disturbed in an incomprehensible way, exclusively on the CPU instances. After a long analysis, the only common point of all these interruptions, in terms of chronology of events, behaviour and logs, was that ffmpeg was throttled down to a single CPU thread actually in use.

Delivery guy collecting a package

We therefore focused our analysis on understanding what could cause this change in ffmpeg’s behaviour. Of the various stress tests run on the instances, only one reproduced it: the kernel-level I/O stress tests, which caused system hangs.

At that point, my client vaguely remembered seeing automated storage migration emails from the provider concerned, sent around the same time as the famous interruptions.

So yes, another important reminder:

Let me be!

The next question they usually ask is:

But how do you prevent it?

It all depends on your budget!

Large consumers of instances can afford to test each instance when spawning it, to make sure there are no noisy neighbors from the start. The tests are typically stress tests specific to each instance template used, and their results are compared to the average values of the instances considered “valid”. Automating your entire infrastructure is key here.
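As an illustration, the acceptance check at spawn time could look like the sketch below. The metric names, the reference values and the 15% tolerance are hypothetical, and every metric is assumed to be of the "higher is better" kind; running the stress tests themselves is left to your own tooling.

```python
def is_instance_viable(results, reference, tolerance=0.15):
    """Accept an instance only if every benchmark reaches the reference value minus `tolerance`.

    `reference` holds the average scores of instances considered valid for this
    template. A metric missing from `results` counts as a failure.
    """
    for metric, expected in reference.items():
        measured = results.get(metric)
        if measured is None or measured < expected * (1 - tolerance):
            return False
    return True


# Hypothetical reference for one instance template, and two fresh instances.
reference = {"cpu_events_per_s": 1000.0, "disk_iops": 5000.0, "net_mbps": 400.0}
good = {"cpu_events_per_s": 980.0, "disk_iops": 4900.0, "net_mbps": 390.0}
noisy = {"cpu_events_per_s": 990.0, "disk_iops": 4950.0, "net_mbps": 10.0}

print(is_instance_viable(good, reference))   # True
print(is_instance_viable(noisy, reference))  # False
```

An instance that fails the check is destroyed and a new one is spawned, which is where the cost discussed next comes from.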

This pre-use stage of the instance can quickly become expensive: some people have been surprised to see dozens of attempts before having a viable instance. Time being money in the Cloud world, …

A dog hunting

Most people implement corrective solutions. The monitoring (see above) is then interfaced with the automation tools and will start a new instance to replace the one detected as disturbed.

This is the least expensive option, but it can have two impacts depending on your service/infrastructure:

- a small delay before detection and/or relaunch

- a service interruption if your instance is not redundant or if your load-balancer is slow to detect its unavailability
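The detection delay listed above is often a deliberate trade-off: replacing an instance on a single bad measurement would make the infrastructure flap. Below is a minimal sketch of such a decision rule, based on steal time; the 10% threshold and the three consecutive breaches are assumptions, and the actual replacement is left to your automation tool.

```python
class ReplacementPolicy:
    """Ask for a replacement only after several consecutive bad measurements."""

    def __init__(self, steal_threshold=10.0, required_breaches=3):
        self.steal_threshold = steal_threshold
        self.required_breaches = required_breaches
        self.breaches = 0

    def observe(self, steal_pct):
        """Record one steal-time sample (in percent) and return True if the instance should be replaced."""
        if steal_pct >= self.steal_threshold:
            self.breaches += 1
        else:
            self.breaches = 0  # one healthy sample resets the counter
        return self.breaches >= self.required_breaches


policy = ReplacementPolicy()
samples = [12, 15, 3, 11, 14, 20]  # hypothetical steal-time percentages
print([policy.observe(s) for s in samples])
# [False, False, False, False, False, True]
```

With one sample per minute, this rule adds roughly three minutes before the relaunch, which is the price paid for not reacting to a short-lived spike.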

Another solution would be to host your services on dedicated infrastructure when it makes sense, and consider Cloud services as overflow solutions.

Do you agree?

I remember an article explaining that these problems were the fault of your application and not of Cloud hosting. We could go back to the eternal war between Dev and Ops, but articles of this kind usually overlook the monitoring aspects completely, and therefore the observability of what is going on. Their point of view is valid, but not gospel.

On your side, have you ever been through these setbacks? How did you react?